Recent

Fixing Claude Code slowness in WSL

If you’re like me, you probably love the Claude models. But when you tried Anthropic’s Claude Code CLI on Windows (specifically inside WSL, where most of us prefer to do our coding), the experience felt completely broken.

It was borderline unusable. We’re talking freezing for several seconds at startup, hanging before every / command, and even stuttering while typing. Since coding with AI usually works better in a Linux environment than in PowerShell (where AI tooling compatibility can be a bit hit or miss), being blocked from using WSL smoothly with Claude Code was a huge turn-off.

Well, good news: there is a ridiculously easy fix!

Onboarding AI into your codebase

13 mins

You build a project. It’s clean, well-structured, cohesive. You know every corner of it. Then life happens: the project moves to a different team for day-to-day maintenance. They bring their own style, their own habits. Things start to drift. Not dramatically, just… gradually. The codebase gets a little messier with every handover.

Now drop an AI agent into that codebase. Same story, amplified. It doesn’t know the history, the constraints, the “we do it this way because of that incident three years ago”. Every change it makes risks introducing side effects it can’t even reason about.

You’ve probably experienced this already: you ask AI to do something, it produces code that looks plausible but breaks your style, misses your constraints, creates subtle bugs. You fix it, try again, hit the same wall. And you start to wonder if this AI thing is all hype.

It’s not. But your codebase isn’t ready for it yet.

The right way to handle changing configuration in ASP.NET Core middleware

7 mins

You’ve got a middleware. It reads a comma-separated list of allowed hosts from configuration. Or maybe it loads a dictionary of route mappings, or parses a set of feature flags into a lookup table. The config value can change at runtime and you need to pre-process it into something your middleware can actually use on every request.

How hard can it be? 🤷

Tempted to run a local coding model on your gaming rig? Don't.

7 mins

Lately, I’ve successfully self-hosted a decent private chatbot and even run a near real-time voice-to-text model locally. So I thought: why not try running a local coding model? I have a pretty decent gaming rig, so it should be able to handle it, right?

Well, it turns out: not really. I spent the whole weekend trying to set up a local coding model and ran into a pile of issues. The models are huge and hungry. Even with my gaming rig, I was barely able to get it running, and it was extremely slow. I ended up dealing with more technical issues than coding tasks.

Choosing the right GitHub Copilot model for the job

6 mins

One of the most common questions I get asked is: “Which AI model should I be using with GitHub Copilot?”

Open GitHub Copilot today and you are greeted with a dropdown menu that looks like a wine list. Sonnet? Gemini? Opus? GPT-5? 🤯 It used to be simple, but now it can be paralysing.

Which one is best? It depends on what you are trying to achieve, how complex the task is, and how you want to manage your credit usage.

My rule of thumb is simple:

Start cheap to think and iterate. Pay for certainty when the blast radius is big.

How AI ruined voice assistants: losing the basics in the race for more

3 mins

Voice assistants used to be simple, reliable helpers. Well, maybe not truly reliable. They weren’t perfect, but for years, you could count on them to set a reminder, add a calendar event, or play your favourite playlist. They quietly made life easier, even if they never lived up to the sci-fi hype.

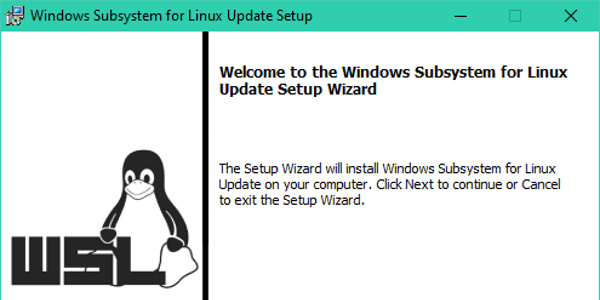

WSL2 with Ubuntu 20.04 step-by-step upgrade: getting started on Windows 10 May 2020 Update

Now that the Windows 10 May 2020 Update is available to everyone, with no need to join the Windows Insider Program and install preview builds, it’s finally time to embrace WSL2.

WSL2 is the second iteration of the Windows Subsystem for Linux, and it finally allows running Linux virtualized inside Windows. This new version brings real virtualization using a real Linux kernel but, compared to a traditional virtual machine, it runs on a lightweight hypervisor and gets close to bare-metal performance.

This will also be used by Docker under the hood, boosting the start-up time and general performance of our containers. Sweet! Furthermore, with WSL2, VPN connections automatically propagate to Linux correctly, which is great news when working from home.

So, let’s stop talking and upgrade our Ubuntu 18.04 WSL environment to Ubuntu 20.04 running on WSL2.

How to test logging when using Microsoft.Extensions.Logging

Logs are a key element for diagnosing, monitoring or auditing an application’s behaviour, so whether you are a library author or an application developer, it is important to ensure that the right logs are generated.

Wouldn’t it be nice if there were an easy way to write tests for them? Let’s start a journey through the best approaches to testing logs when using Microsoft.Extensions.Logging.

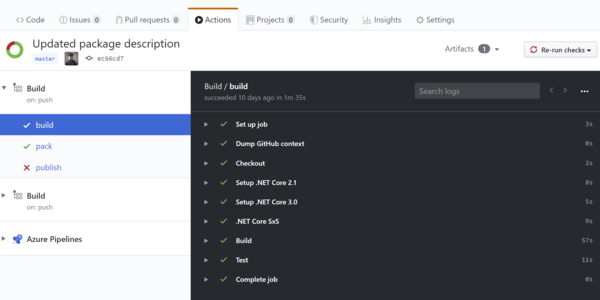

Build a .NET library with GitHub Actions

GitHub Actions is a great free tool for continuous integration of your open-source .NET library, directly in GitHub, saving you from setting up other tools and linking accounts. Setting it up can feel like a daunting task but, if you follow this guide, you will be able to set it up in 10 minutes!

Should I use global.json?

I often get asked whether it is better to have a global.json in a .NET project (not necessarily .NET Core) to define a specific .NET Core SDK version. Unfortunately, if you need a short answer, you will get the typical engineer’s answer from me: it depends! Here is the full answer so you can decide what best suits your needs.